Imagine you are teaching a friend how to solve math problems. You don't want to just give them a bunch of problems and immediately test them on those exact problems, right? Instead, you would teach them using some problems (training), and then give them new problems they haven't seen before to see if they have really learned (testing). 😊 This is essentially what we do with machine learning models.

Why do we split the dataset?

- We split the dataset into two parts: a training set and a testing set. The training set is used to train the machine learning model, while the testing set is used to evaluate how well the model performs on new, unseen data. 📊

How does it work?

Let's say you have a dataset of 1000 images of cats and dogs, and you want to build a machine learning model to classify them. You might split this dataset into 800 images for training (to teach the model) and 200 images for testing (to evaluate how well it learned). 🐱🐶

The training set is used to adjust the model's parameters so that it learns to classify images correctly. The testing set is then used to see how well the model can classify new images it hasn't seen before. If the model performs well on the testing set, it suggests that it has learned effectively from the training data. 🧠💡
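To make the 800/200 idea concrete, here is a minimal sketch of what a split does under the hood, using only Python's standard library. The `dataset` list is a hypothetical stand-in for the 1000 labelled images (it is not real image data), and the shuffle-then-slice approach is just one simple way to split, not necessarily how any particular library does it.

```python
import random

# Hypothetical stand-in for 1000 labelled images: (image_id, label) pairs.
dataset = [(i, "cat" if i % 2 == 0 else "dog") for i in range(1000)]

# Shuffle a copy with a fixed seed so the split is the same on every run.
rng = random.Random(1)
shuffled = dataset[:]
rng.shuffle(shuffled)

# The first 80% becomes the training set, the remaining 20% the testing set.
split_index = len(shuffled) * 80 // 100
train_set = shuffled[:split_index]
test_set = shuffled[split_index:]

print(len(train_set), len(test_set))  # 800 200
```

Every image lands in exactly one of the two sets, so the model is never tested on an image it was trained on.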

Why do we need it?

- We split the dataset so that we can honestly assess how our model will perform on unseen data. If we used the same data for training and testing, the model could score well simply by memorizing the training examples without learning the underlying patterns. This is called overfitting: the model performs well on the training data but poorly on new data. A held-out testing set lets us detect overfitting. It does not prevent overfitting by itself, but it tells us when we need to act, for example by simplifying the model or collecting more data. 🛡️✨
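The memorization problem can be illustrated with a deliberately silly "model". The sketch below is a toy under stated assumptions: the data (numbers labelled by whether they are even) and the `memorizer_predict` helper are made up for illustration and are not part of this series' dataset.

```python
# Toy data: the true pattern is that even numbers are labelled 1, odd numbers 0.
def true_label(x):
    return 1 if x % 2 == 0 else 0

train_x = list(range(0, 80))    # numbers the model is trained on
test_x = list(range(80, 100))   # numbers the model has never seen
train_y = [true_label(x) for x in train_x]
test_y = [true_label(x) for x in test_x]

# A "model" that only memorizes: it stores every training example in a
# lookup table and answers 0 for anything it has not seen before.
memory = dict(zip(train_x, train_y))

def memorizer_predict(x):
    return memory.get(x, 0)

def accuracy(xs, ys):
    correct = sum(1 for x, y in zip(xs, ys) if memorizer_predict(x) == y)
    return correct / len(xs)

train_accuracy = accuracy(train_x, train_y)
test_accuracy = accuracy(test_x, test_y)
print(train_accuracy, test_accuracy)  # 1.0 0.5
```

If we had only measured accuracy on the training data, this model would look perfect. The testing set reveals that it is no better than a coin flip on new inputs.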

In summary, splitting a dataset into training and testing sets helps us train machine learning models effectively and evaluate their performance accurately on new data. It's like teaching someone with some examples and then testing them on new problems to see if they've truly learned. 😊🎓

Let's get started on our dataset using this Python code block! 🐍

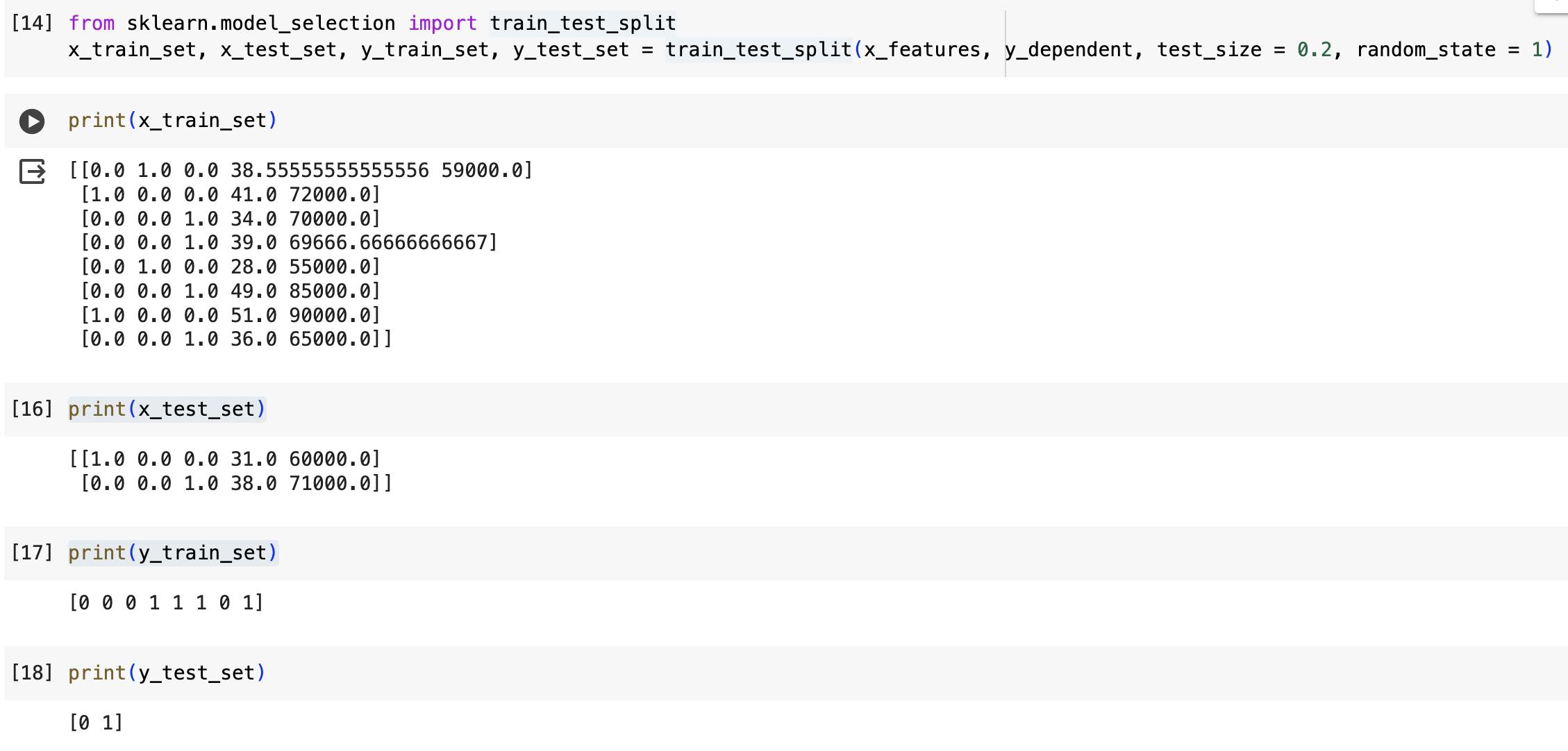

from sklearn.model_selection import train_test_split

x_train_set, x_test_set, y_train_set, y_test_set = train_test_split(x_features, y_dependent, test_size = 0.2, random_state = 1)

Now let's break down the lines of code step by step:

- `from sklearn.model_selection import train_test_split`: This line imports a function called `train_test_split` from the `model_selection` module of the `sklearn` (scikit-learn) library. This function is commonly used to split a dataset into two subsets for training and testing.
- `x_train_set, x_test_set, y_train_set, y_test_set = train_test_split(x_features, y_dependent, test_size = 0.2, random_state = 1)`: This line actually splits the dataset into training and testing sets. Let's break it down further:
  - `x_features`: This represents the independent variables (features) of the dataset, which we defined and used in the previous chapters.
  - `y_dependent`: This represents the dependent variable (target) of the dataset.
  - `test_size = 0.2`: This parameter specifies the proportion of the dataset allocated to the testing set. In this case, 20% of the data will be used for testing and the remaining 80% for training.
  - `random_state = 1`: By default, `train_test_split` shuffles the rows before splitting. This parameter fixes the seed of that shuffle so the split is the same each time we run the code, making our results reproducible.

The `train_test_split` function returns the data in four parts:

- `x_train_set`: This will hold the features used for training.
- `x_test_set`: This will hold the features used for testing.
- `y_train_set`: This will hold the corresponding target values for training.
- `y_test_set`: This will hold the corresponding target values for testing.

So, in summary, these lines of code import a function for splitting a dataset, then use it to split both the features and the target variable into a training set and a testing set.
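To see the shapes and the effect of `random_state` without needing the series' CSV file, here is a small sketch on made-up data. The toy arrays below are assumptions for illustration only (10 samples, 2 features each), constructed so that row `i` of the features is `[2*i, 2*i + 1]` and its target is `i`.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Hypothetical toy data: 10 samples with 2 features each, one target per sample.
x_features = np.arange(20).reshape(10, 2)
y_dependent = np.arange(10)

x_train_set, x_test_set, y_train_set, y_test_set = train_test_split(
    x_features, y_dependent, test_size=0.2, random_state=1
)

# 20% of 10 samples is 2 test samples; the other 8 go to training.
print(x_train_set.shape, x_test_set.shape)  # (8, 2) (2, 2)
print(y_train_set.shape, y_test_set.shape)  # (8,) (2,)

# Features and targets stay aligned: each row still sits next to its own target.
rows_aligned = np.array_equal(x_train_set[:, 0] // 2, y_train_set)
print(rows_aligned)  # True

# Splitting again with the same random_state reproduces the same test set.
_, x_test_again, _, y_test_again = train_test_split(
    x_features, y_dependent, test_size=0.2, random_state=1
)
same_split = np.array_equal(x_test_set, x_test_again)
print(same_split)  # True
```

Changing `random_state` to a different number (or leaving it out) would generally give a different, but equally valid, 80/20 split.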


Great job! We're almost finished with Data Preprocessing. Just one more step to go: Feature Scaling, which we'll cover in the next chapter. Feel free to revisit the previous chapters in this series to refresh your memory and grasp everything fully.

And guess what? Here's the complete code we've been rocking! 🌟 You can grab it all in one neat package from my GitHub page. Just hop over here: GitHub - PythonicCloudAI/ML_W_PYTHON/Data_Preprocessing.ipynb.

Happy coding! 📥

# **Step 1: Import the necessary libraries**

import pandas as pd

import scipy

import numpy as np

from sklearn.preprocessing import MinMaxScaler

import seaborn as sns

import matplotlib.pyplot as plt

# **Step 2: Data Importing**

df = pd.read_csv('SampleData.csv')

# **Step 3 : Segregation of dataset into Matrix of Features & Dependent Variable Vector**

x_features = df.iloc[:, :-1].values

y_dependent = df.iloc[:, -1].values

print(x_features)

print(y_dependent)

# **Step 4 : Handling missing Data**

from sklearn.impute import SimpleImputer

imputer = SimpleImputer(missing_values=np.nan, strategy='mean')

imputer.fit(x_features[:,1:3])

x_features[:,1:3] = imputer.transform(x_features[:,1:3])

print(x_features)

# **Step 5 : Encoding Categorical Data : One Hot Encoding**

from sklearn.compose import ColumnTransformer

from sklearn.preprocessing import OneHotEncoder

column_transformer = ColumnTransformer(transformers=[('encoder',OneHotEncoder(),[0])], remainder='passthrough')

x_features = np.array(column_transformer.fit_transform(x_features))

print(x_features)

# **Step 6 : Encoding Categorical Data : Label Encoding**

from sklearn.preprocessing import LabelEncoder

label_encoding = LabelEncoder()

y_dependent = label_encoding.fit_transform(y_dependent)

print(y_dependent)

# **Step 7 : Splitting dataset into Training and Test Dataset**

from sklearn.model_selection import train_test_split

x_train_set, x_test_set, y_train_set, y_test_set = train_test_split(x_features, y_dependent, test_size = 0.2, random_state = 1)

print(x_train_set)

print(x_test_set)

print(y_train_set)

print(y_test_set)