Hey there, fellow tech enthusiasts! Today, we're diving into a topic that's crucial for ensuring our machine learning models deliver top-notch results: feature scaling! 🚀

Accurate, dependable results are the whole point of a machine learning model. One thing that's easy to overlook, though, is how the scale of the features in our data can affect how well the model works. This article is all about feature scaling: we'll explain it in simple terms and use examples to show why it matters for many machine learning algorithms. 😊📊

Let's make this concrete with a simple example! Imagine you have two friends, Raj and Priya. Raj measures things in kilometers, while Priya measures things in meters. If you ask them about the distance to a park, Raj might say it's 2 kilometers away, while Priya might say it's 2000 meters away. They're both talking about the same distance, but in different units. 🚶‍♂️🚶‍♀️

Now, imagine you want to compare their measurements. To level the playing field, you convert Priya's measurements to kilometers, so everything is in the same unit. This way, you can compare the numbers directly without worrying about the different units they used. 📏✨

In the realm of machine learning, features are like Raj and Priya's measurements: they might have different scales or units. Feature scaling plays the same role as converting Priya's measurements to kilometers. It brings all the features onto a comparable scale, so that no feature outweighs the others just because its numbers happen to be bigger. For many algorithms, this leads to fairer comparisons between features and often to more accurate results. 📊🎯

So, what exactly is feature scaling? Feature scaling is the process of adjusting the scale or range of the features in a dataset so that they are all on a similar scale. This helps many machine learning algorithms perform better, especially those that rely on distances or gradient descent, by preventing features with larger scales from dominating those with smaller scales.
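To see what "dominating" looks like in practice, here is a minimal sketch. The three people, their incomes, and their ages are made-up numbers for illustration, and the `euclidean` and `min_max_columns` helpers are hypothetical names, not part of any library. It compares distances before and after rescaling each feature to the 0-to-1 range (the Min-Max method described later in this article).

```python
import math

def euclidean(a, b):
    """Straight-line distance between two equal-length points."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def min_max_columns(rows):
    """Rescale every column of `rows` to [0, 1].

    Assumes each column has at least two distinct values,
    otherwise the range would be zero.
    """
    columns = list(zip(*rows))
    lows = [min(col) for col in columns]
    highs = [max(col) for col in columns]
    return [
        tuple((v - lo) / (hi - lo) for v, lo, hi in zip(row, lows, highs))
        for row in rows
    ]

# Made-up people described by (annual income, age in years)
alice = (50_000, 25)
bob = (51_000, 60)    # similar income, very different age
carol = (58_000, 26)  # similar age, different income

# Raw features: the income column is so large that age barely matters.
raw_ab = euclidean(alice, bob)    # about 1000.6, almost all from income
raw_ac = euclidean(alice, carol)  # about 8000.0

# Scaled features: income and age now contribute on comparable terms.
s_alice, s_bob, s_carol = min_max_columns([alice, bob, carol])
scaled_ab = euclidean(s_alice, s_bob)
scaled_ac = euclidean(s_alice, s_carol)

print("raw:   ", raw_ab, raw_ac)
print("scaled:", scaled_ab, scaled_ac)
```

On the raw numbers, Alice looks far closer to Bob than to Carol, even though Bob is 35 years older, simply because income is measured in much bigger numbers. After scaling, the 35-year age gap counts about as much as the income gap.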

Feature scaling is typically applied as a preprocessing step in machine learning workflows. Here's a simplified explanation of how it's applied:

Identify Features: First, identify the features (or variables) in your dataset that need to be scaled.

Choose Scaling Method: Next, choose a scaling method based on your dataset and the requirements of your machine learning algorithm. Common scaling methods include:

Min-Max Scaling:

Explanation: Min-Max scaling rescales features to a fixed range, commonly between 0 and 1. It subtracts the minimum value of the feature and then divides by the range (the maximum value minus the minimum value).

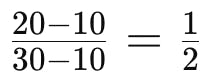

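Formula:

$$x' = \frac{x - x_{\min}}{x_{\max} - x_{\min}}$$

where $x$ is the original value, $x_{\min}$ and $x_{\max}$ are the minimum and maximum values of the feature, and $x'$ is the scaled value.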

Example: Suppose we have a feature representing temperatures ranging from 10°C to 30°C. To scale these temperatures using Min-Max scaling:

If we have a temperature of 20°C, the scaled value would be:

$$\frac{20 - 10}{30 - 10} = \frac{10}{20} = 0.5$$

So the scaled value is 0.5.

When to Use: Use Min-Max scaling when you know the range of your data and want to bound it within a specific range, typically between 0 and 1.

Example Use Cases: Image processing where pixel values need to be normalized between 0 and 1, or when dealing with algorithms like neural networks with input values expected to fall within a certain range.
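Here is a minimal sketch of Min-Max scaling in plain Python, reusing the temperature example from above. The function name `min_max_scale` and its `new_min` / `new_max` parameters are my own choices for illustration, not a library API.

```python
def min_max_scale(values, new_min=0.0, new_max=1.0):
    """Rescale `values` linearly so they span [new_min, new_max]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # The formula divides by (max - min), which would be zero here.
        raise ValueError("all values are identical; cannot min-max scale")
    span = hi - lo
    return [new_min + (v - lo) / span * (new_max - new_min) for v in values]

# Temperatures in °C, ranging from 10 to 30 as in the example above
temperatures_c = [10, 15, 20, 25, 30]
scaled = min_max_scale(temperatures_c)
print(scaled)  # [0.0, 0.25, 0.5, 0.75, 1.0]
```

The 20°C reading lands at 0.5, matching the hand calculation. Passing a different target range, for example `min_max_scale(temperatures_c, -1.0, 1.0)`, stretches the same values over that range instead.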

Standardization (Z-score Scaling):

Explanation: Standardization rescales features so that they have a mean of 0 and a standard deviation of 1. It subtracts the mean of the feature and then divides by the standard deviation.

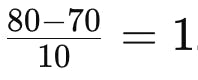

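Formula:

$$z = \frac{x - \mu}{\sigma}$$

where $x$ is the original value, $\mu$ is the mean of the feature, $\sigma$ is its standard deviation, and $z$ is the scaled value.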

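Example: Let's say we have a feature representing exam scores with a mean of 70 and a standard deviation of 10. If a student scores 80 on the exam, the scaled value would be:

$$\frac{80 - 70}{10} = 1$$

So the scaled value is 1, meaning the score sits one standard deviation above the mean.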

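When to Use: Standardization (Z-score scaling) is useful when you want to compare different sets of data or features, especially if they have different units or scales. It works particularly well when your data roughly follows a normal distribution (bell curve). It also tends to cope with outliers better than Min-Max scaling, because it doesn't squeeze everything into a fixed range set by the most extreme values, although extreme values still shift the mean and standard deviation.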

Example Use Cases:

Comparing test scores from different classrooms or different years.

Analyzing the performance of stocks from different industries.

Evaluating the heights and weights of individuals from different populations.

Assessing the monthly sales of products across various regions.
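To tie the standardization formula to something runnable, here is a minimal sketch using only Python's built-in `statistics` module. The helper names `z_score` and `standardize` are hypothetical, and the list of exam scores is made up so that its mean is 70 and its standard deviation is 10, like the example above. It also assumes the population standard deviation (`statistics.pstdev`); the article doesn't say which variant to use, so treat that as my assumption.

```python
import statistics

def z_score(x, mean, std):
    """Standardize a single value given a known mean and standard deviation."""
    return (x - mean) / std

def standardize(values):
    """Rescale `values` to mean 0 and standard deviation 1.

    Uses the population standard deviation (an assumption, see above).
    """
    mean = statistics.mean(values)
    std = statistics.pstdev(values)
    if std == 0:
        raise ValueError("all values are identical; cannot standardize")
    return [(v - mean) / std for v in values]

# The worked example: a score of 80 with mean 70 and standard deviation 10
print(z_score(80, 70, 10))  # 1.0

# Made-up exam scores with mean 70 and population standard deviation 10
scores = [55, 65, 70, 75, 85]
z_scores = standardize(scores)
print(z_scores)
```

After standardizing, the scores are expressed as "how many standard deviations away from the mean", so the 70 becomes 0 and scores below the mean come out negative.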

And that's a wrap for this article! 😊 We've explored what Feature Scaling in Machine Learning is and looked at two of its most common types, Min-Max scaling and standardization. Next up, we'll dive into the practical implementation. Until then, keep on learning and smiling! 🚀📚