5. Streamlining Your Machine Learning Journey: A Beginner's Guide to Data Preprocessing

Hey there, future data maestro! Ready to dive into the wonderful world of machine learning? Buckle up, because we're about to take the next step of our journey. We already collected our data in step one, so step two is... drumroll... Data Preprocessing! 🚀

Why It's Cool:

Before we dazzle our machines with fancy algorithms, let's make sure our data is squeaky clean! That's where data preprocessing comes in. It's like giving your data a spa day, complete with massages and facials! 💆‍♂️✨

What's on the Agenda:

Cleaning Up: Time to bid adieu to duplicates and errors! Let's sweep away those messy bits and make our data sparkle like a freshly cleaned window. ✨🧹

Missing Data SOS: Uh-oh! Looks like some data decided to play hide-and-seek. No worries, we'll find those missing pieces and either fill them in or kindly ask them to leave. 🕵️‍♀️🔍

Normalize for Harmony: We're all about fairness here! Let's scale our numeric features to a common range (for example, 0 to 1), so that no feature drowns out the others just because it's measured in bigger units. It's like teaching your data to speak the same language. How cool is that? 🌐📊
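Here's a tiny sketch of those three chores in pandas. It uses a made-up DataFrame, so the column names `age` and `income` and their values are hypothetical and don't come from our sample dataset. Filling gaps with the column mean is just one option. Dropping the incomplete rows is another.

```python
import numpy as np
import pandas as pd

# Hypothetical toy data: row 2 duplicates row 1, and one age is missing
raw = pd.DataFrame({
    "age": [25, 32, 32, np.nan, 41],
    "income": [40000, 52000, 52000, 61000, 75000],
})

# Cleaning up: drop exact duplicate rows
clean = raw.drop_duplicates()

# Missing data SOS: fill missing ages with the column mean
# (clean.dropna() would remove those rows instead)
clean = clean.assign(age=clean["age"].fillna(clean["age"].mean()))

# Normalize for harmony: min-max scale every column to the range [0, 1]
scaled = (clean - clean.min()) / (clean.max() - clean.min())

print(scaled)
```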

Why It's Essential:

Think of data preprocessing as the superhero cape for your machine learning model. The cleaner the data, the sharper the predictions! With great preprocessing comes great accuracy! 💪🎯

Our Plan: Python Power! 🐍

We're enlisting the mighty Python to tackle our preprocessing adventures. Whether you are cozying up with Google Colab or snuggling into Jupyter Notebook, the choice is yours! Plus, there are tons of tutorials to guide you along the way. It's like having a helpful sidekick by your side! 🦸‍♂️📚

Let's Dive In:

Armed with sample data and Python prowess, we are ready to rock this preprocessing party! Remember, there's no one-size-fits-all approach. We'll explore different options and find what works best for our data. It's all about the journey, not just the destination! 🌟🚀

So, are you ready to unleash your inner data superhero? Let's prep that data and pave the way for some mind-blowing machine learning magic! ✨🔮

Step 1: Import the necessary libraries

```python
import pandas as pd
import scipy
import numpy as np
from sklearn.preprocessing import MinMaxScaler
import seaborn as sns
import matplotlib.pyplot as plt
```

Below are simplified explanations of each of those libraries:

What's a library, anyway? 📚 In the world of programming, libraries are toolboxes filled with ready-made code that help us write our own programs faster. Instead of starting from scratch, we can grab pieces of code from these libraries and use them in our own projects. Plus, they're great for sharing code between different programs. It's like borrowing your friend's cool gadgets for a project! 🛠️

For example, in Python, we have awesome libraries like NumPy, pandas, and Matplotlib. 🐍 These libraries help us do things like crunching numbers, organizing data, and making cool charts and graphs! We just need to invite them to our coding party with a simple "import" statement, and we're good to go! 🎉

So, why are libraries important? Well, they're like superpowers for programmers! They help us write better, faster, and more organized code. Plus, they encourage us to share our code with others in the programming community. It's all about teamwork and making coding easier for everyone! 💪

Now, let's take a closer look at each of our library buddies that we have invited to our coding party:

import pandas as pd --> This line imports the pandas library and assigns it to the alias pd. Pandas is a software library for data manipulation and analysis. It provides data structures and functions needed to manipulate structured data.
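For a quick taste of that "manipulation and analysis," here's a sketch that filters rows and computes a per-group average. The `store` and `price` columns and their values are made up for illustration.

```python
import pandas as pd

# Hypothetical data for illustration only
sales = pd.DataFrame({
    "store": ["A", "B", "A", "B", "C"],
    "price": [120, 80, 100, 60, 150],
})

# Keep only rows where price is at least 100 (boolean filtering)
pricey = sales[sales["price"] >= 100]

# Average price per store (groupby + aggregation)
avg_by_store = sales.groupby("store")["price"].mean()

print(pricey)
print(avg_by_store)
```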

import scipy --> This line imports the SciPy library. SciPy is a free and open-source Python library used for scientific computing and technical computing. It contains modules for optimization, linear algebra, integration, interpolation, special functions, FFT, signal and image processing, ODE solvers, and other tasks common in science and engineering.
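In preprocessing, SciPy's `scipy.stats` module comes in handy. For example, you can use it to compute z-scores when hunting for outliers. This is a minimal sketch with made-up numbers. The cutoff of 2 is an arbitrary choice for such a tiny sample, and 3 is also common. We import the `stats` submodule explicitly because a bare `import scipy` does not always make submodules like `scipy.stats` available (older releases required importing them directly).

```python
import numpy as np
from scipy import stats

# Hypothetical measurements with one suspicious value
values = np.array([10, 12, 11, 13, 12, 11, 50])

# z-score: how many standard deviations each value lies from the mean
z = stats.zscore(values)

# Flag values more than 2 standard deviations away (threshold is a judgment call)
outliers = values[np.abs(z) > 2]
print(outliers)
```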

import numpy as np --> This line imports the NumPy library and assigns it to the alias np. NumPy is a library for the Python programming language, adding support for large, multi-dimensional arrays and matrices, along with a large collection of high-level mathematical functions to operate on these arrays.
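To see what "operating on whole arrays" means, here's a small sketch. NumPy applies arithmetic element-wise without a Python loop. Broadcasting then lets us subtract a per-column mean from every row in one step. The array values are made up.

```python
import numpy as np

# A 3x2 array: 3 rows (samples), 2 columns (features); values are made up
X = np.array([[1.0, 200.0],
              [2.0, 400.0],
              [3.0, 600.0]])

col_means = X.mean(axis=0)      # mean of each column -> shape (2,)
centered = X - col_means        # broadcasting: subtracts the means from every row

print(col_means)
print(centered)
```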

from sklearn.preprocessing import MinMaxScaler --> This line imports the MinMaxScaler class from the preprocessing module of the scikit-learn library. MinMaxScaler scales and translates each feature individually such that it is in the given range on the training set, e.g., between zero and one.
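With the default range [0, 1], each value x becomes (x − min) / (max − min), where min and max are computed per column from the data passed to `fit`. The sketch below uses made-up numbers to show this. It also shows why "on the training set" matters: new data outside the training range gets scaled with the training min and max, so it can land outside [0, 1].

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

# Made-up training data: two features on very different scales
X_train = np.array([[1.0, 100.0],
                    [3.0, 300.0],
                    [5.0, 500.0]])

scaler = MinMaxScaler()                          # default feature_range=(0, 1)
X_train_scaled = scaler.fit_transform(X_train)   # learns per-column min/max, then scales

# Same result by hand: (x - min) / (max - min), computed per column
manual = (X_train - X_train.min(axis=0)) / (X_train.max(axis=0) - X_train.min(axis=0))

# New data is scaled with the min/max learned from the training set
X_new = np.array([[6.0, 50.0]])
X_new_scaled = scaler.transform(X_new)

print(X_train_scaled)
print(X_new_scaled)   # values can fall outside [0, 1]
```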

import seaborn as sns --> This line imports the Seaborn library and assigns it to the alias sns. Seaborn is a Python data visualization library based on Matplotlib. It provides a high-level interface for drawing attractive and informative statistical graphics.

import matplotlib.pyplot as plt --> This line imports the pyplot module from the Matplotlib library and assigns it to the alias plt. Matplotlib is a plotting library for the Python programming language and its numerical mathematics extension NumPy. pyplot is a collection of functions that provide a simple interface to Matplotlib’s object-oriented plotting API.
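Here's the more hands-on Matplotlib route, which draws a scatter plot by setting each piece ourselves. The study-hours and score values are made up. The `Agg` line is there only so the sketch runs outside a notebook.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend; skip this in Jupyter/Colab
import matplotlib.pyplot as plt
import numpy as np

# Made-up data: study hours vs. exam score
hours = np.array([1, 2, 3, 4, 5])
score = np.array([52, 58, 65, 71, 80])

fig, ax = plt.subplots()
ax.scatter(hours, score)           # one dot per (hours, score) pair
ax.set_xlabel("Hours studied")
ax.set_ylabel("Exam score")
ax.set_title("Hours vs. score")

fig.savefig("hours_vs_score.png")  # or plt.show() in a notebook
plt.close(fig)
```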

Once we've got our library buddies on board, it's time to bring in the actual data and start playing around with it. And guess what? That's our next step – Step 2: Data Importing! 🚀 Let's dive in 🎉

Step 2: Data Importing

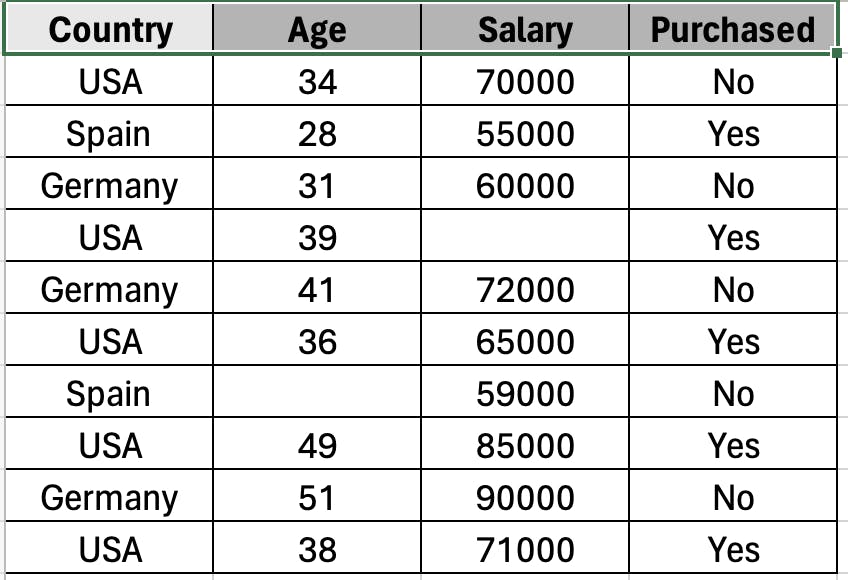

The sample dataset we are going to work on looks like this as below :-

To import the data from the file named "SampleData.csv" for use in our machine learning model, we can use the following line:

```python
df = pd.read_csv('SampleData.csv')
```

This line of code uses the pandas library's read_csv function to read a CSV (Comma-Separated Values) file named 'SampleData.csv' and store the data in a DataFrame.
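We can't ship the actual SampleData.csv here, so this sketch gives `read_csv` a small in-memory CSV through `io.StringIO` instead. The columns and values are hypothetical. With a real file, you'd pass the file name as shown above. `head()` and `shape` are handy first checks right after loading.

```python
import io
import pandas as pd

# Hypothetical CSV content standing in for SampleData.csv
csv_text = """name,age,city
Asha,28,Pune
Ravi,35,Delhi
Meera,,Mumbai
"""

df = pd.read_csv(io.StringIO(csv_text))  # behaves like pd.read_csv('SampleData.csv')

print(df.head())   # first rows (up to 5)
print(df.shape)    # (rows, columns)
```

Notice that the empty age in the last row comes back as a missing value (NaN), which is exactly the kind of gap our preprocessing needs to handle.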

Here’s a breakdown:

pd: This is the alias for pandas, which we set up earlier with the line import pandas as pd.

read_csv: This is a function provided by pandas that reads a CSV file and converts it into a DataFrame.

'SampleData.csv': This is the name of the CSV file that read_csv reads. Because it's a relative name, pandas looks for it in the current working directory (in Jupyter, that's usually the folder containing the notebook). If the file lives somewhere else, you need to pass the full path to the file instead.

df: This is the variable the DataFrame is assigned to. After this line runs, df holds the DataFrame created from 'SampleData.csv', and you can use df to refer to it in the rest of your code.
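To make the "full path" point concrete, here's a sketch that creates a throwaway CSV inside a temporary folder and reads it back through its full path. The `tempfile` folder and the file contents exist only for this demo. With your own data, you'd write something like `pd.read_csv('/path/to/SampleData.csv')`, where `/path/to/` is a placeholder for wherever your file actually lives.

```python
import tempfile
from pathlib import Path

import pandas as pd

with tempfile.TemporaryDirectory() as folder:
    # Write a tiny hypothetical CSV into a folder that is NOT the working directory
    csv_path = Path(folder) / "SampleData.csv"
    csv_path.write_text("x,y\n1,2\n3,4\n")

    print(Path.cwd())          # where relative names like 'SampleData.csv' are looked up
    print(csv_path.exists())   # the file is there, just not in the working directory

    # A bare relative name would not find this file, so pass the full path instead
    df = pd.read_csv(csv_path)

print(df)  # the DataFrame lives in memory, so it survives the folder's deletion
```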

A DataFrame is a two-dimensional labeled data structure with columns potentially of different types. You can think of it like a spreadsheet or SQL table, or a dictionary of Series objects. It is generally the most commonly used pandas object. DataFrames are great for representing real data: rows correspond to instances (examples, observations, etc.), and columns correspond to features of these instances.
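Here's that description as a sketch in code. We build a DataFrame from a dictionary, so each key becomes a column and each column can hold a different type. The data is made up.

```python
import pandas as pd

# Each dict key becomes a column; each list holds that column's values
df = pd.DataFrame({
    "name": ["Asha", "Ravi", "Meera"],   # text
    "age": [28, 35, 41],                 # integers
    "member": [True, False, True],       # booleans
})

print(df.shape)    # (3, 3): 3 rows (instances), 3 columns (features)
print(df.dtypes)   # one data type per column

age = df["age"]    # selecting one column gives a pandas Series
print(type(age))
```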

And that wraps up today's blog! Pretty straightforward, right? In our next chapter, we'll dive into handling missing data in our dataset. Stay tuned for more simplified and fun lessons on our learning journey! 🚀